The largest AI labs and tech giants have agreed to put their models through independent security and vulnerability testing before they go live. The voluntary agreement is part of a wider deal with the US government on improving AI safety and alignment. It has been signed by seven leading AI companies: Amazon, Anthropic, Google, Inflection, Meta, Microsoft and OpenAI.

The White House agreement holds the AI labs to a set of voluntary AI safety commitments, including watermarking generated content and testing output for misinformation risk. Beyond testing and security measures, the companies have committed to sharing information on ways to reduce risk and to investing in cybersecurity.

The new agreement is seen as an early move by the Biden administration to regulate the development of AI in the US. Some American lawmakers have called for comprehensive, EU-style legislation, but so far nothing concrete is on the table. The White House said in a statement: “The Biden-Harris Administration is currently developing an executive order and will pursue bipartisan legislation to help America lead the way in responsible innovation.”

The agreement, published as a Fact Sheet on the White House website, commits the seven companies to internal and external security testing of their AI systems before release. They also agree to share information on managing AI risks across the industry and with government and civil society. In addition, the companies commit to facilitating third-party discovery and reporting of vulnerabilities, for example through bug bounty programmes like those run by Google.

The seven organisations agreed to begin implementing the commitments immediately, in work the administration described as “fundamental to the future of AI”. According to the White House, the work underscores three principles for the development of AI: safety, security and trust. “To make the most of AI’s potential, the Biden-Harris Administration is encouraging this industry to uphold the highest standards to ensure that innovation doesn’t come at the expense of Americans’ rights and safety,” the Fact Sheet declares.

Aimed at future AI models

The agreement primarily applies to future and potentially more powerful AI models, such as OpenAI’s GPT-5 and Google’s Gemini. As such, it does not currently apply to existing models such as OpenAI’s GPT-4, Anthropic’s Claude 2, Google’s PaLM 2 and Amazon’s Titan.

The agreement places heavy emphasis on earning the public’s trust and sets out four areas for signatories to focus on. One is the development of a watermarking system that makes it clear when content is AI-generated. The companies must also publicly and regularly report their models’ capabilities and limitations, along with areas of appropriate and inappropriate use.

Each of the companies also agreed to prioritise research on the societal risks AI can pose, specifically around harmful bias, discrimination and privacy. “The track record of AI shows the insidiousness and prevalence of these dangers, and the companies commit to rolling out AI that mitigates them,” says the White House fact sheet.

Content from our partners

How tech teams are driving the sustainability agenda across the public sector

Finding value in the hybrid cloud

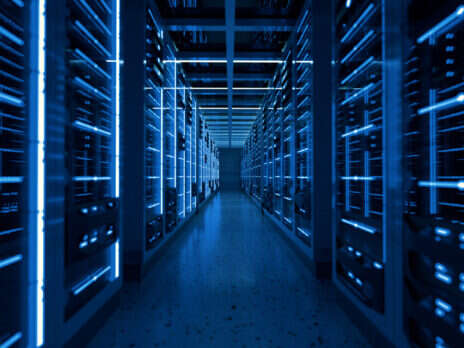

Optimising business value through data centre operations

One of the commitments goes beyond protecting people from AI and covers the use of AI to help address society’s greatest challenges. The White House explained: “From cancer prevention to mitigating climate change to so much in between, AI – if properly managed – can contribute enormously to the prosperity, equality, and security of all.”

In less than a year, general-purpose artificial intelligence has become one of the most important regulatory topics in technology. Countries around the world are investing in AI and investigating how best to ensure it is built, deployed and used safely.

The UK government has already secured agreement from OpenAI, Anthropic and Google’s DeepMind to provide early access to models for AI safety researchers. This was announced alongside the new £100m Foundation Model Taskforce chaired by Ian Hogarth.

“Policymakers around the world are considering new laws for highly capable AI systems,” said Anna Makanju, OpenAI’s VP of Global Affairs. “Today’s commitments contribute specific and concrete practices to that ongoing discussion. This announcement is part of our ongoing collaboration with governments, civil society organisations and others around the world to advance AI governance.”
