OpenAI is being investigated by the US Federal Trade Commission (FTC) over claims the ChatGPT maker has broken consumer protection rules. The agency is concerned the AI lab has put personal reputations and data at risk through its business practices and technology. The investigation highlights the risks to businesses of allowing staff to use generative AI tools, which have the potential to expose valuable and confidential information.

The FTC sent OpenAI a 20-page demand for records on how it addresses risks related to its AI models, according to a report in the Washington Post. This includes detailed descriptions of all complaints it has received in relation to its products. It is focused on instances of ChatGPT making false, misleading, disparaging, or harmful statements about real people.

This is just the latest regulatory headache for the AI lab, which was suddenly propelled into the spotlight following the surprising success of ChatGPT in November last year. Not long after it was launched, Italian regulators banned OpenAI from collecting data in the country until ChatGPT was in compliance with GDPR legislation. The launch also sparked activity from the European Commission, updating the EU AI Act to reflect the risk of generative AI.

Company founder and CEO Sam Altman has been on a global charm offensive to try to convince regulators around the world to take the company's perspective on AI regulation into account. This includes putting the spotlight on safety research and advanced AGI, or superintelligence, rather than a direct focus on today’s AI models like GPT-4. It has been reported that OpenAI heavily lobbied the EU to water down regulations in the bloc’s AI Act.

Globally, governments are wrestling with how to regulate a technology as powerful as generative or foundation model AI. Some are calling for a restriction on the data used to train the models; others, such as the UK Labour Party, are calling for full licensing of anyone developing generative AI. Others still, including the UK government, are taking a more light-touch approach to regulation.

As well as the potential risk of harm and even libel from generated content being investigated by the FTC, there are also more immediate and fundamental risks to enterprises from employees putting company data onto an open platform like ChatGPT.

FTC filings also request any information on research the company has done on how well consumers understand the “accuracy or reliability of outputs” from its tools. The agency was likely spurred on to start the investigation following a lawsuit in Georgia, where radio talk show host Mark Walters is suing OpenAI for defamation, alleging ChatGPT made up legal claims against him, including claims about where he worked and about financial issues.

The FTC wants extensive details on the OpenAI products, how it advertises them and what policies are in place before it releases any new products to the market, as well as times it has held back on releasing a new model due to safety risks in testing.

Content from our partners

Finding value in the hybrid cloud

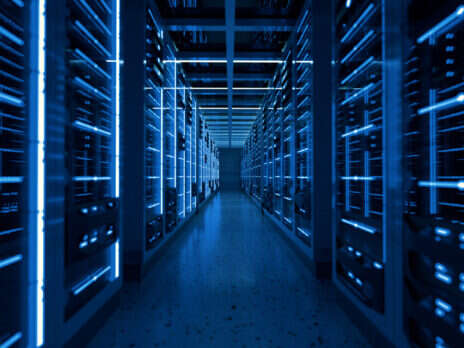

Optimising business value through data centre operations

Why revenue teams need to be empowered through data optimisation

Employees still using ChatGPT despite privacy concerns

Despite myriad security concerns, businesses appear to be slow in recognising the potential risks posed by ChatGPT. A new study from cybersecurity company Kaspersky found that one in three employees have been given no guidance on the use of AI with company data. This presents a real risk because, unless it is used with an authorised and paid-for enterprise account, or is explicitly instructed not to, ChatGPT can reuse any data entered by the user in future training or improvements. This could lead to company data appearing in generated responses for other users who don’t work for that company.

In its survey of 1,000 full-time British workers, Kaspersky found that 58% were regularly using ChatGPT to save time on mundane tasks such as summarising long text or meeting notes. This may change in future as meeting software like Teams, Zoom and Slack now have secure instances of foundation models capable of taking and summarising those notes.

Many of those using ChatGPT for work also say they use it for generating fresh content, creating translations and improving texts, which suggests they are inputting sensitive company information. More than 40% also said they don’t verify the accuracy of the output before passing it off as their own work.

“Despite their obvious benefits, we must remember that language model tools such as ChatGPT are still imperfect as they are prone to generating unsubstantiated claims and fabricate information sources,” warned Kaspersky data science lead Vladislav Tushkanov.

“Privacy is also a big concern, as many AI services can re-use user inputs to improve their systems, which can lead to data leaks,” he added. “This is also the case if hackers were to steal users’ credentials (or buy them on the dark web), as they could get access to the potentially sensitive information stored as chat history.”

Sue Daley, director for tech and innovation at techUK, told Tech Monitor that AI can be seen as a supportive tool providing employees with the potential to maximise productivity and streamline mundane activities. She says it can also be used to free up time to focus on high-priority and higher-value tasks for the organisation, but it does come with risks.

“It is crucial that the modern workforce has the skills and knowledge to work and thrive in an AI-driven world of work,” she warns. “Businesses should endeavour to train their employees in the responsible use of AI, to empower them to use this innovative technology and help them to unleash its full potential.”
